This article walks through a basic data flow on Google Cloud Platform (GCP). It uses GCE (Google Compute Engine) and GCS (Google Cloud Storage), and a startup script automates the whole process. The data flow does the following:

- Set up a template for creating the GCP instance (Debian Linux). The template defines:

  - The startup script to use

  - The name of the storage bucket

  - Scopes/permissions

  - The service account

- When the template is ready, create a GCP instance on the GCE host machine

- When the machine starts:

  - Run the startup script

  - The startup script downloads the Stackdriver agent

  - The startup script installs the Stackdriver agent

  - Stackdriver gathers data from syslog

  - Get the SSH keys for SSH access from the metadata service
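The template settings listed above could also be expressed from the command line. The following is a minimal sketch, not the article's own method: the template name, the `debian-12` image family, and the `startup.sh` file name are assumptions chosen for illustration. It prints the `gcloud` command by default and only runs it when `DRY_RUN=0` is set.

```shell
#!/bin/bash
# Sketch only: every value below is a placeholder, not taken from the article.
TEMPLATE_NAME="${TEMPLATE_NAME:-lab-template}"    # hypothetical template name
BUCKET_URL="${BUCKET_URL:-gs://mybucketname/}"    # bucket reference for the metadata key
STARTUP_SCRIPT="${STARTUP_SCRIPT:-startup.sh}"    # local copy of the startup script

# Assemble the call as an array so each argument stays intact.
# With --scopes and no --service-account, the default service account is used.
template_cmd=(
  gcloud compute instance-templates create "${TEMPLATE_NAME}"
  --image-family=debian-12
  --image-project=debian-cloud
  --metadata="lab-logs-bucket=${BUCKET_URL}"
  --metadata-from-file="startup-script=${STARTUP_SCRIPT}"
  --scopes=storage-rw,logging-write
)

# Print the assembled command on a single line.
print_template_cmd() {
  echo "${template_cmd[*]}"
}

if [ "${DRY_RUN:-1}" = "1" ]; then
  print_template_cmd          # default: show what would run
else
  "${template_cmd[@]}"        # DRY_RUN=0: actually create the template
fi
```

Building the command as an array keeps the dry-run output and the real invocation in sync, which makes it easier to review the scopes and metadata before anything is created.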

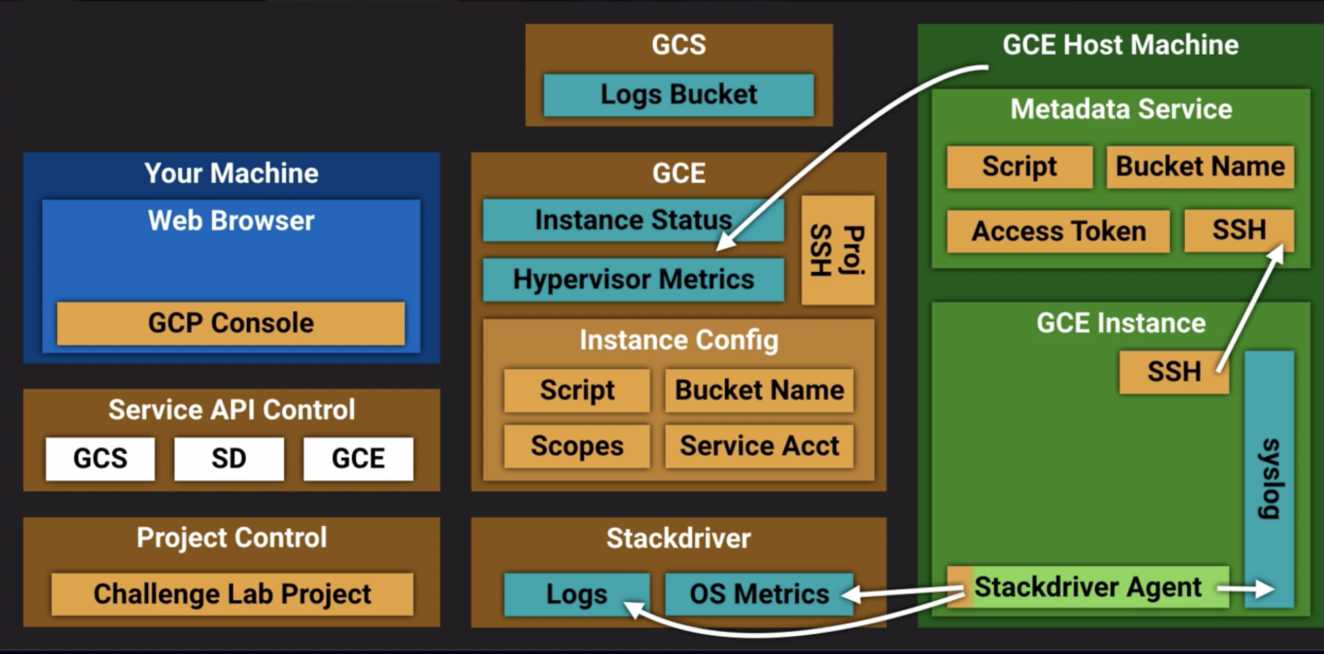

So the workflow looks like this:

1. Browser -> Connect to the GCP Console, from which you can access:

- The startup script, using Cloud Shell -> editor -> get the script file. Copy it so it is ready to paste.

2. Create a project in GCP, then select that project. This enables:

- GCS (Google Cloud Storage)

- SD (Stackdriver). It does NOT enable GCE.

3. GCS -> Create a new bucket and enter a bucket name.

4. Enable GCE: GCP Console -> go to VM Instances -> this enables the GCE API.

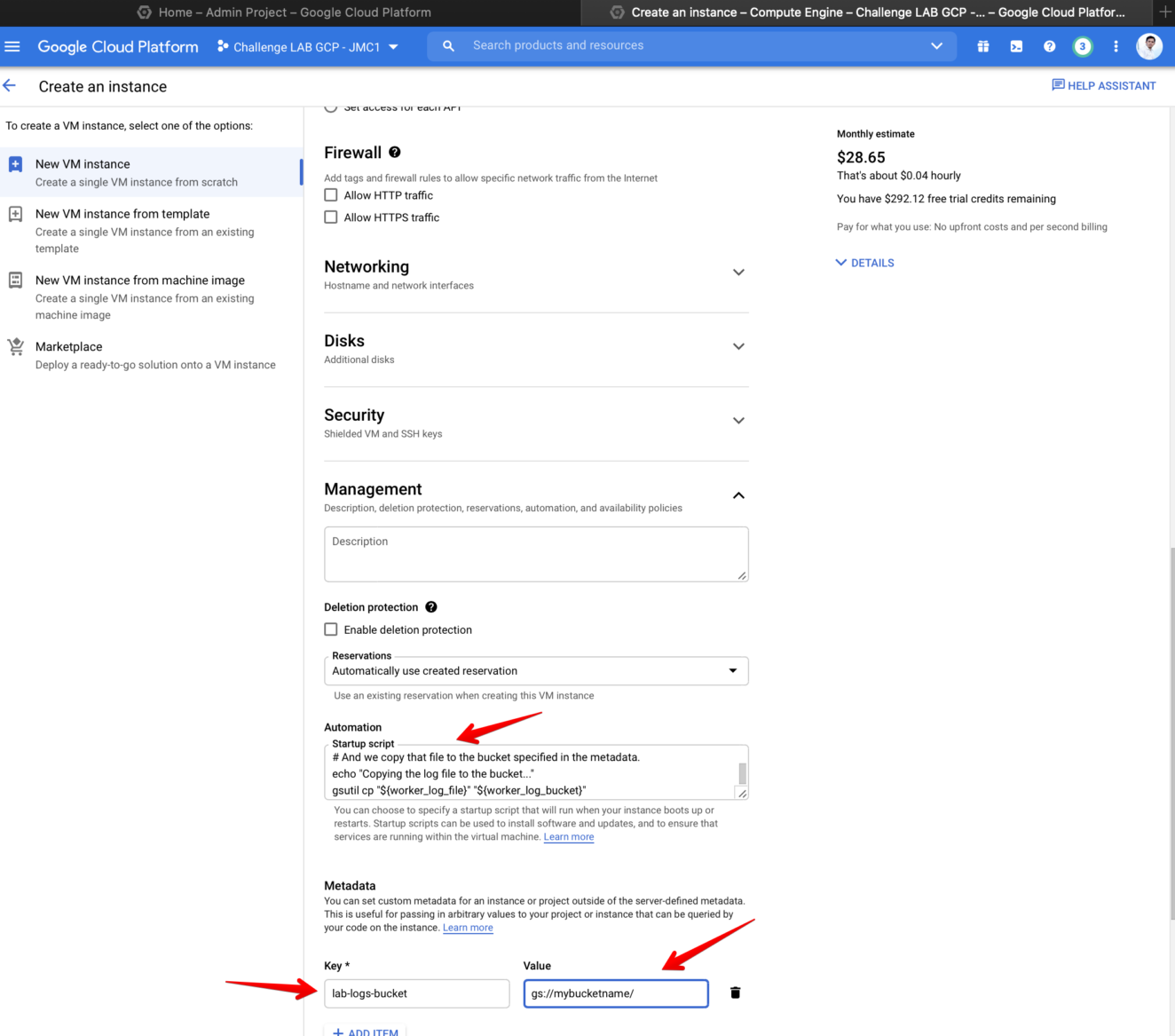

5. Create an instance in GCE, then configure it (see the screenshot below for an example):

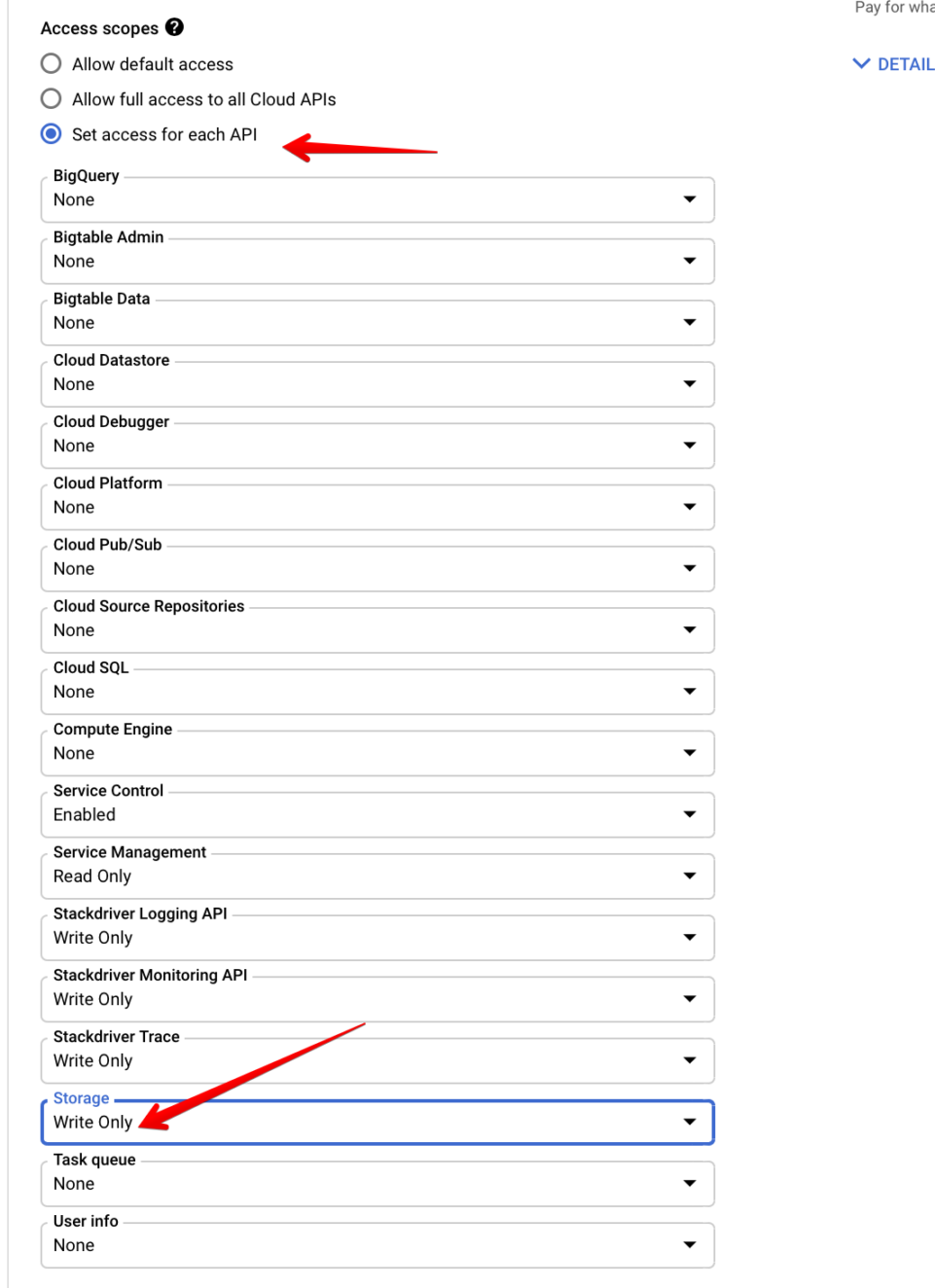

- Use the default service account under “Identity and API access” -> “Service account”

- Paste the script under “Management” -> “Automation” -> “Startup script”

- Pass a reference to the bucket created earlier under “Metadata” -> Key and Value. Key = lab-logs-bucket (the startup script reads this key) and Value = “gs://mybucketname/”

- Set the access scopes to allow writing to GCS
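Steps 3 to 5 can be approximated from Cloud Shell instead of the console. The sketch below is an illustration under assumptions: the instance name, zone, and `startup.sh` file name are hypothetical, and `bucket_url` and `run` are helper functions introduced here, not part of any Google tool. Commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/bash
# Sketch only: names and zone are placeholders, not values from the article.
BUCKET_NAME="${BUCKET_NAME:-mybucketname}"
INSTANCE_NAME="${INSTANCE_NAME:-lab-worker-1}"   # hypothetical instance name
ZONE="${ZONE:-us-central1-a}"                    # hypothetical zone

# Normalize a bucket name or URL to the "gs://name/" form used for the metadata value.
bucket_url() {
  local name="${1#gs://}"   # drop the scheme if present
  name="${name%/}"          # drop one trailing slash if present
  printf 'gs://%s/\n' "${name}"
}

# Print the command in dry-run mode (the default); execute it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

LOG_BUCKET_URL="$(bucket_url "${BUCKET_NAME}")"

# Step 3: create the bucket.
run gsutil mb "gs://${BUCKET_NAME}"

# Step 4: enable the Compute Engine API.
run gcloud services enable compute.googleapis.com

# Step 5: create the instance with the startup script, bucket metadata, and scopes.
run gcloud compute instances create "${INSTANCE_NAME}" \
  --zone="${ZONE}" \
  --metadata="lab-logs-bucket=${LOG_BUCKET_URL}" \
  --metadata-from-file="startup-script=startup.sh" \
  --scopes=storage-rw,logging-write
```

The metadata key `lab-logs-bucket` must match the name the startup script looks up, otherwise the final upload has no destination.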

Startup script:

#! /bin/bash

#

# Echo commands as they are run, to make debugging easier.

# GCE startup script output shows up in "/var/log/syslog" .

#

set -x

#

# Stop apt-get calls from trying to bring up UI.

#

export DEBIAN_FRONTEND=noninteractive

#

# Make sure installed packages are up to date with all security patches.

#

apt-get -yq update

apt-get -yq upgrade

#

# Install Google's Stackdriver logging agent, as per

# https://cloud.google.com/logging/docs/agent/installation

#

curl -sSO https://dl.google.com/cloudagents/install-logging-agent.sh

bash install-logging-agent.sh

#

# Install and run the "stress" tool to max the CPU load for a while.

#

apt-get -yq install stress

stress -c 8 -t 120

#

# Report that we're done.

#

# Metadata should be set in the "lab-logs-bucket" attribute using the "gs://mybucketname/" format.

log_bucket_metadata_name=lab-logs-bucket

log_bucket_metadata_url="http://metadata.google.internal/computeMetadata/v1/instance/attributes/${log_bucket_metadata_name}"

worker_log_bucket=$(curl -H "Metadata-Flavor: Google" "${log_bucket_metadata_url}")

# We write a file named after this machine.

worker_log_file="machine-$(hostname)-finished.txt"

echo "Phew! Work completed at $(date)" >"${worker_log_file}"

# And we copy that file to the bucket specified in the metadata.

echo "Copying the log file to the bucket..."

gsutil cp "${worker_log_file}" "${worker_log_bucket}"
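If the `lab-logs-bucket` key is missing from the instance metadata, the metadata server answers with a 404, and a plain `curl` call stores the error body in `worker_log_bucket`. One possible guard, sketched here with two hypothetical helper functions (`is_gs_url` and `copy_if_valid` are not part of the original script), checks the value before calling `gsutil`:

```shell
#!/bin/bash
# Sketch: guard the final upload against a missing or malformed metadata value.

# Succeeds only for values that look like "gs://<something>".
is_gs_url() {
  case "$1" in
    gs://?*) return 0 ;;
    *)       return 1 ;;
  esac
}

# Hypothetical wrapper around the script's gsutil call.
# Usage: copy_if_valid <local-file> <bucket-url>
copy_if_valid() {
  local file="$1" bucket="$2"
  if is_gs_url "${bucket}"; then
    gsutil cp "${file}" "${bucket}"
  else
    echo "Skipping upload: '${bucket}' is not a gs:// URL" >&2
    return 1
  fi
}
```

In the startup script, the last line would then become `copy_if_valid "${worker_log_file}" "${worker_log_bucket}"`. Adding `-sf` to the `curl` call is a complementary option, since `-f` makes `curl` return an error on a 404 instead of printing the response body.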